In time lapse photography, "frame rate" can refer to two things: 1. The rate at which we capture images, or 2. The rate at which those images are played back for an audience.

For example, if we are displaying a video for people to watch, they're most likely going to be watching it at 24 or 30 frames per second. At roughly these rates, the human eye stops picking out individual images and infers smooth motion. When we get into post-production and how to compose time lapse images, we will go over frame rates for final processing and how they work. For now, just know that 30 fps is a good rate for people to see motion and make smooth productions.

In the other instance, we use frame rates to explain how frequently we are going to be capturing images. The science behind time lapse can be as specific or as vague as you want. If you're in a hurry and want to capture a few minutes of some action, you can throw together a setup, start your intervalometer and let it run, and you may get a decent result. However, if you're looking to create videos that capture motion appropriately and get you the best results, you're going to want to do some more reading.

Imagine these few different scenarios:

- You're lying in the grass watching the clouds roll by while you read a book or have a picnic. The clouds are your focus here.

- It's 10pm, it's dark out, and you're on top of a building overlooking a city skyline with lots of cars and pedestrians passing in the foreground. The lights are your focus here.

- You work in a tall building in NYC that has a perfect view of the 1 WTC construction. You want to shoot a lapse lasting 10 years.

Now again, for some of these scenarios, you can hook up a cheap intervalometer, press go, and maybe come up with some decent work. But the issue here is that each of these types of motion is going to take a significantly different amount of time. Let's break down the reasons behind planning for these scenarios.

- Clouds move fairly quickly. If you're patient, you can sit and watch one move from one end of your vision to the other. Other clouds move much slower, or not at all. Because of this, this kind of motion can be captured within a few minutes. Something like 10 minutes' worth of shooting will get you decent results. What's most important, though, is that you are shooting frames rapidly enough that the motion carries through when you put them together.

So for example, let's say you have 10 minutes to spare, the clouds are moving quickly, and the scene is just right. As a reference point, roughly 5 to 6 seconds between shots is an acceptable interval for this kind of motion. So, some quick math:

10 minutes * 60 seconds = 600 seconds

600 seconds / 6 seconds per shot = 100 frames

100 frames / 24 fps = roughly 4 seconds of footage
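The same arithmetic can be sketched as a small helper. This is only an illustration: the function name `clip_length` and its parameters are made up for this example and don't come from any intervalometer or camera software.

```python
def clip_length(shoot_seconds, interval_seconds, playback_fps):
    """Return (frames captured, seconds of footage) for a time lapse shoot."""
    # One frame per interval; any partial interval at the end yields no frame.
    frames = int(shoot_seconds // interval_seconds)
    # Played back at a fixed frame rate, each frame lasts 1 / fps seconds.
    return frames, frames / playback_fps


# The cloud example: 10 minutes of shooting, one shot every 6 seconds, 24 fps playback.
frames, seconds = clip_length(10 * 60, 6, 24)
print(f"{frames} frames -> {seconds:.1f} seconds of footage")
```

Swapping in a different interval or playback rate shows how quickly the final clip length changes.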

- OK, scenario 2 begins to get more complicated. Because it's nighttime, we need to ensure we have a fast enough shutter speed to avoid motion blur. The basic laws of photography still stand even though we're shooting lapses, so do your homework and make sure you have everything you need before you run out at night trying to do this. Aside from a fast shutter, we're going to want a very short interval as well. For a scene that involves cars driving by, or pedestrians moving in and out of frame, a shorter interval is beneficial. Something along the lines of 3-4 seconds between shots should help keep the motion smooth and allow the viewer to follow the action.

- Finally, for this last scenario, we are looking at a much, much longer interval. If we're doing 10 years' worth of action and progress, we have a lot of factors to consider. First, it would be wise to get an estimate of how often visible progress will actually be made in construction. For something like this, you might look out your window every single day and never see any visible changes for a week or two. On the other hand, the workers might hit a patch of work that flies by, compared with other work that takes weeks or months and may produce no visible change. In a situation such as this, we are going to use an interval somewhere in the range of once per day or once every 2 days. Let's look at the math:

10 years * 365 days a year = 3650 days

1 shot a day * 3650 days = 3650 frames

3650 frames / 30 fps = roughly 122 seconds = about 2 minutes of footage
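To compare the two candidate intervals side by side, here is a rough sketch. The names `DAYS`, `PLAYBACK_FPS` and `footage_seconds` are illustrative only, and leap days are ignored, just as in the hand calculation.

```python
DAYS = 10 * 365       # 10 years, ignoring leap days
PLAYBACK_FPS = 30


def footage_seconds(days, days_between_shots, fps):
    """Seconds of footage when one frame is captured every `days_between_shots` days."""
    frames = days // days_between_shots
    return frames / fps


for gap in (1, 2):
    secs = footage_seconds(DAYS, gap, PLAYBACK_FPS)
    print(f"1 shot every {gap} day(s): {secs:.0f} seconds ({secs / 60:.1f} minutes)")
```

One shot a day comes out to about 2 minutes, and one shot every 2 days to about 1 minute.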

So, of course, in this instance, for 10 years' worth of work, we are going to want something longer than a 2-minute clip. At the very least, we're going to want something close to a 5-minute video. Let's figure it out backwards.

5 minutes = 300 seconds

300 * 30 fps = 9000 frames total

9000 frames / 3650 days = roughly 2.5 shots a day
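Working backwards like this is easy to script. The sketch below uses a hypothetical helper, `shots_per_day`, written just for this example: you give it the clip length you want and it tells you how often to shoot.

```python
def shots_per_day(target_minutes, playback_fps, total_days):
    """Shots needed per day to fill `target_minutes` of footage at `playback_fps`."""
    frames_needed = target_minutes * 60 * playback_fps
    return frames_needed / total_days


# A 5-minute video at 30 fps, spread over 10 years (3650 days).
rate = shots_per_day(5, 30, 3650)
print(f"{rate:.2f} shots per day, or one shot every {24 / rate:.1f} hours")
```

In practice you would round to something convenient, such as 2 or 3 shots a day, and accept a slightly shorter or longer clip.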

When you look at it on paper, it's not a difficult concept. But pack all your bags, plan your trip, haul your gear out to location, get set up, and then realize you have no calculations prepared and no idea how to estimate the shoot, and you'll be kicking yourself. Understand the relationship between frame rates and your timing between shots, and you should be able to do the simple math in your head any time. For those of you looking to take all of the guesswork out of this, there are a handful of interval calculators available for Android and iPhone.

With those examples, you should have a stronger idea of how frame rates play a major part in planning your shoots. With this knowledge, you should also be starting to see the need for tools that most cameras don't come with off the shelf. While some newer digital point-and-shoots are starting to come with an interval mode, most don't have one, and on top of this, any out-of-the-box mode is never going to be comparable to a strong understanding of the principles.

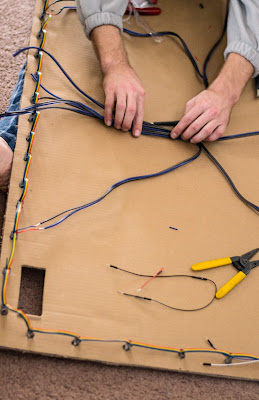

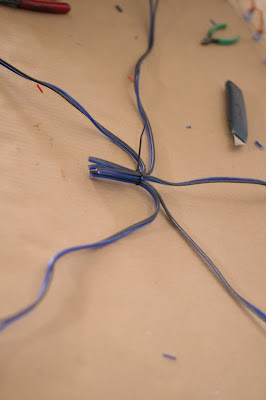

In parts 3 & 4 of this project, I'm going to go over my build and share all the things I learned along the way. My ideas weren't perfect, but they were unique and hadn't been attempted before. Some things worked great, while others failed miserably. Regardless, my goal for this project was to save a few bucks and have a working dolly for time lapse projects. In the end, I got a working DSLR dolly for roughly half of what the major distributors charge. The next two posts will be mostly hardware related, outlining my build, including a parts list and a lot of good resources for those of you working on these dolly projects.

For those of you looking for a more in-depth code breakdown, well... we're not there yet. The controller I ended up using was an MX2 dollyengine, which already has a great deal of documentation. Dynamic Perception has been great about embracing open source projects, and already gives great insight into how their product works. Perhaps one day in the future, we'll see what sorts of tinkering we can do with this thing.